AME - Escuela de Ciencia de Datos

September 2019

Historias con Impacto a Través de la Ciencia de Datos (Stories with Impact Through Data Science)

Vianey Leos(-)Barajas

Depts of Forestry & Envir. Resources and Statistics

North Carolina State University

What is data science?

Maybe a combination of Statistics, Computer Science and Mathematics?

What about machine learning and big data?

In this talk we'll ignore any pre-conceived notions of what data science is and focus on:

<< how to tell stories with data >>

Stories with Impact

The biggest impact we can have is when we make discoveries about the world we live in.

Something to always keep in mind: the stories we tell come from our perspectives, shaped by our experiences and driven by our personal choices. Being open and transparent about this is part of telling an impactful story.

Reproducible research:

- Can anyone reproduce your workflow?
- Do the results reproduce?

Data availability:

- Making the data available

Research that's accessible to everyone:

- Why did the story turn out the way it did?
- What was tried?
- What failed?

Allowing insights into your research process.

Story Time

What Story Do We Want to Tell?

This is usually the easier part -- there are so many cool questions we can try to answer with data!

Some examples:

- How do white sharks react to tourism boats?

- How can we personalize (clothing/videos/products)? Check out StitchFix's algorithms team.

How do we tell our story with data?

One of the hardest parts and one of the most important steps. Domain expertise comes in! [*]

Quantifying the behavior we want to observe. Evaluating and re-evaluating.

Is it feasible to collect that information?

What information can we not collect?

Re-examining our original question and re-formulating it with what's possible.

Data are objective → FALSE

We make decisions about what data to use to answer questions, decisions about how to collect the data, etc. Let's fully embrace subjectivity in every step of the process.

[*] Domain expertise is a must. Not using it can lead to extremely irresponsible practices.

Quantifying the behavior we want to observe

Real World (of Mexican sharks)

Full Video: Pelagios Kakunjá YouTube Channel

Source: Pelagios Kakunjá

Quantified World

- May be able to detect the shark's presence (if tagged)
- Cannot record the presence of untagged animals
- Surface currents may be available
- Temperature
- A lot of information cannot be recorded

Quantifying the behavior we want to observe.

→ This sets in motion the type of analysis that will be used.

For instance, let's look at this question:

How do white sharks react to tourism boats?

- What do we mean by react?
- What type of behavior are we looking for?

We need to quantify their movements.

- Whole body movement, longitude/latitude?

What was done: location data are collected approximately every 5 minutes.

When we talk about 'white shark' behaviors for this project, we mean "movement patterns observed at a 5-minute temporal scale" based on positional data.

Even the phrase "movement patterns" can be and is quite subjective.

White Shark Movements

Data collected by Alison Towner.

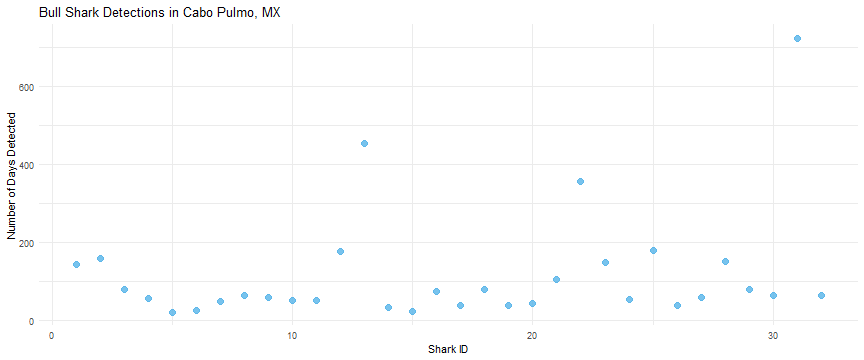

Bull Shark Movements

Work with Pelagios Kakunjá.

For more shark work: check out MigraMar and Alex Hearn.

Data Organization and Manipulation

Organization

Organizing and structuring your data set is one of the most important aspects of any project.

Manipulation

Writing scripts to go from the raw data set to the formatted data set for analysis. In R, take a look at the tidyverse.
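As a minimal sketch of such a script, assuming made-up positional records (the column names `shark_id`, `time`, `lon`, `lat` and all values are hypothetical, not the data from the talk), base R alone can take a raw table to an analysis-ready one. Tidyverse verbs such as `arrange()` and `mutate()` would be a natural alternative.

```r
# Hypothetical raw positional records (made-up values, not the talk's data)
raw <- data.frame(
  shark_id = c("A", "A", "A", "B", "B"),
  time     = c("2019-09-01 10:05:00", "2019-09-01 10:00:00",
               "2019-09-01 10:10:00", "2019-09-01 10:00:00",
               "2019-09-01 10:05:00"),
  lon      = c(18.01, 18.00, 18.03, 18.50, 18.52),
  lat      = c(-34.01, -34.00, -34.01, -34.20, -34.22),
  stringsAsFactors = FALSE
)

# Parse the timestamps and sort the records within each individual
raw$time <- as.POSIXct(raw$time, tz = "UTC")
formatted <- raw[order(raw$shark_id, raw$time), ]
rownames(formatted) <- NULL

# Minutes elapsed since the previous fix of the same individual
# (NA for the first fix of each individual)
formatted$dt_min <- ave(as.numeric(formatted$time), formatted$shark_id,
                        FUN = function(s) c(NA, diff(s)) / 60)

formatted
```

Keeping every step in a script, rather than editing the raw file by hand, is what lets someone else rerun the path from raw data to analysis data.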

What Next?

Once we've quantified the question we'd like to answer and collected data, exploratory data analysis can be done.

Lots and lots of plots...that tell our story.

Depending on what our story is so far, we'll attempt to match our data story with an appropriate model.

Keep in mind that which plots we make and which models we choose are also subjective. We'll embrace it.

What models are seen as...

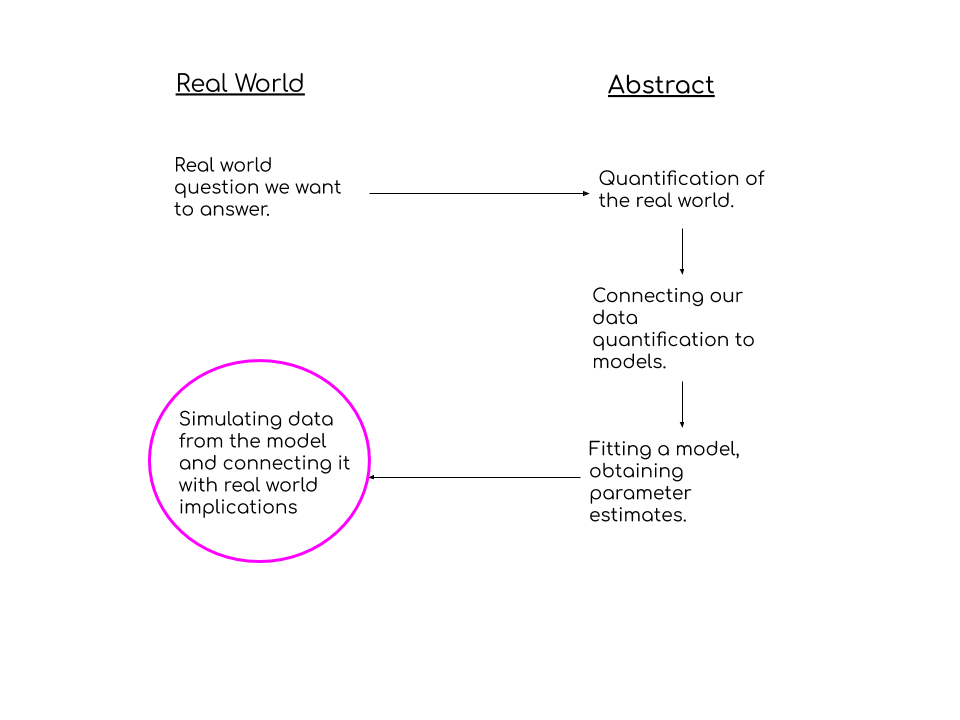

How we should think of models...

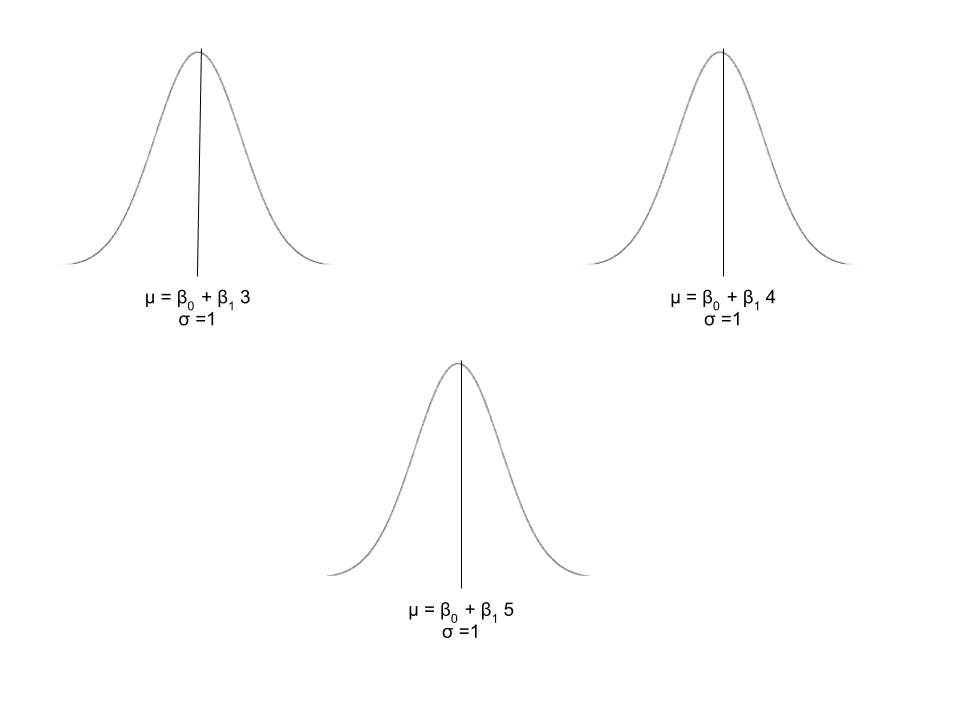

Our old friend: Simple Linear Regression

Basic setup:

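$$y = \beta_0 + \beta_1 x + \epsilon$$

$$\epsilon \sim N(0, \sigma^2)$$

Altogether, we have

$$y \mid x \sim N(\beta_0 + \beta_1 x, \sigma^2)$$

Interpretation of the parameters $\beta_0, \beta_1, \sigma^2$:

- $\beta_0$ = the expected value of $y$ when $x = 0$
- $\beta_1$ = the expected change in $y$ when you increase the value of $x$ by 1
- $\sigma^2$ = a measure of the variability around the mean $\beta_0 + \beta_1 x$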

The story of SLR

What does it actually mean?

The means under different values of x are connected (they're friends on a line.)

SLR is an abstract concept

Like all statistical models. One of the best ways to learn about a model is to simulate from it.

Deterministic (fixed, unknowns):

- $\beta_0, \beta_1$
- $\sigma^2$

Stochastic (drawn from a distribution):

- $\epsilon \sim N(0, \sigma^2)$

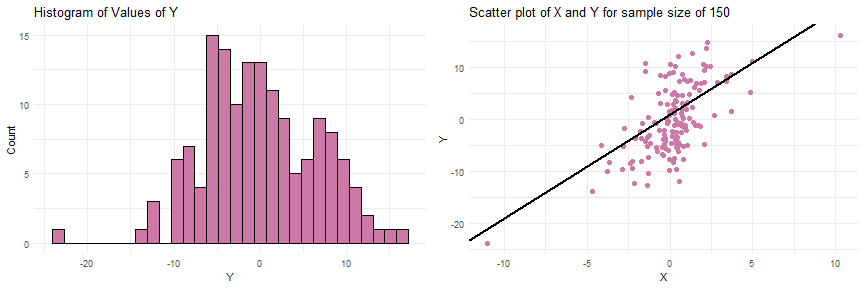

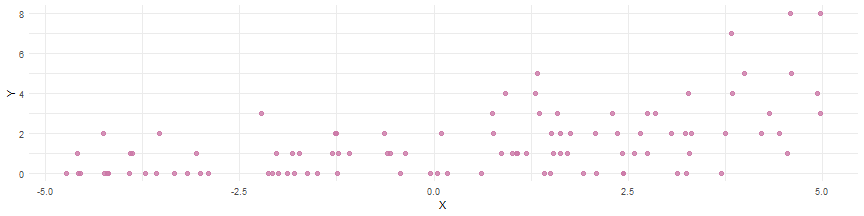

Simulating Data from a SLR

    # R code
    set.seed(17)
    beta0 <- 1      # intercept
    beta1 <- 2      # slope
    std.dev <- 5    # standard deviation of the errors
    x <- rt(n = 150, df = 3)
    y <- beta0 + beta1*x + rnorm(n = 150, mean = 0, sd = std.dev)

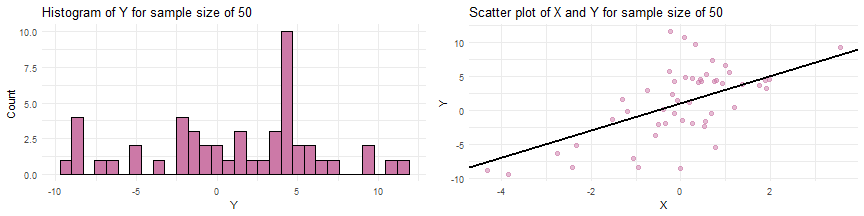

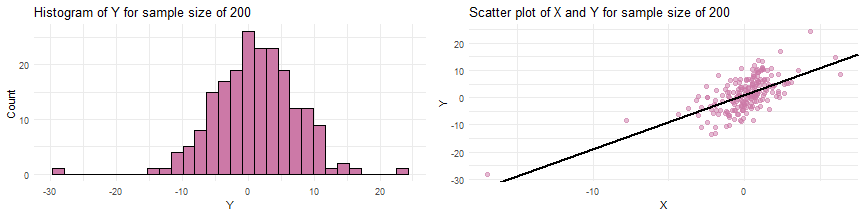

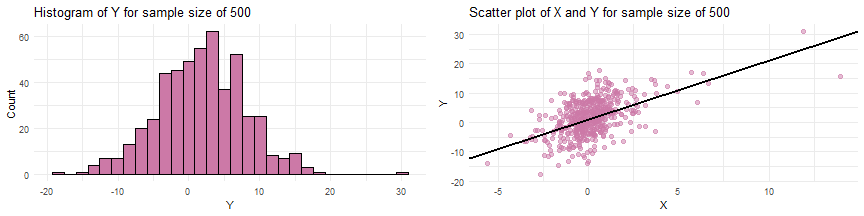

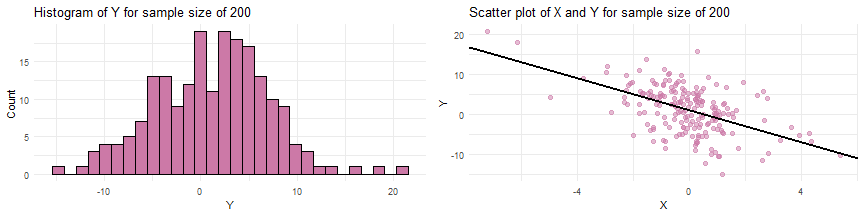

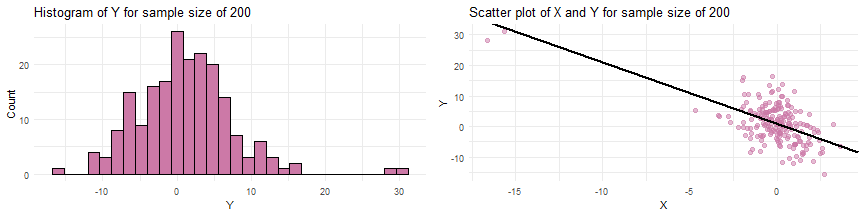

Simulating data from a SLR with sample sizes $N = 50, 200, 500$

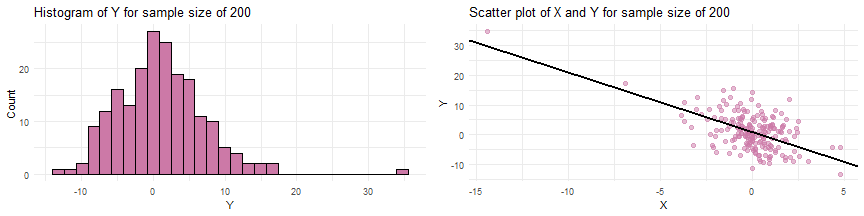
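A comparison across sample sizes can be sketched as follows. This is only an illustration that reuses the parameter values from the SLR simulation code; the helper `simulate_slr()` and the plot layout are assumptions, not the code behind the figure.

```r
set.seed(17)
beta0 <- 1; beta1 <- 2; std.dev <- 5

# Simulate one SLR data set of size n (hypothetical helper);
# x is t-distributed with 3 degrees of freedom, as in the code above
simulate_slr <- function(n) {
  x <- rt(n = n, df = 3)
  y <- beta0 + beta1 * x + rnorm(n = n, mean = 0, sd = std.dev)
  data.frame(x = x, y = y)
}

sims <- lapply(c(50, 200, 500), simulate_slr)
names(sims) <- c("N = 50", "N = 200", "N = 500")

# One scatterplot per sample size, with the true mean line overlaid
op <- par(mfrow = c(1, 3))
for (nm in names(sims)) {
  plot(sims[[nm]]$x, sims[[nm]]$y, main = nm, xlab = "x", ylab = "y")
  abline(a = beta0, b = beta1, col = "red")
}
par(op)
```

Rerunning with a different seed gives a feel for how much the picture changes from one simulated data set to the next.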

Simulating data from a SLR with sample size $N = 200$ and parameter values $\beta_0 = 1$, $\beta_1 = -2$, $\sigma = 5$

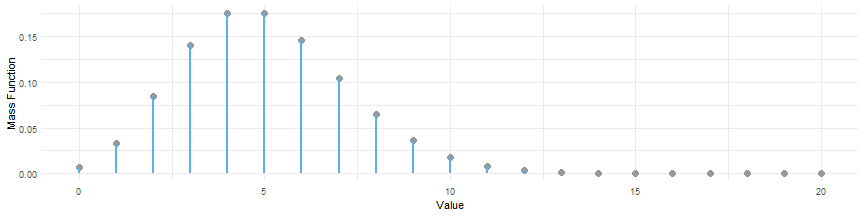

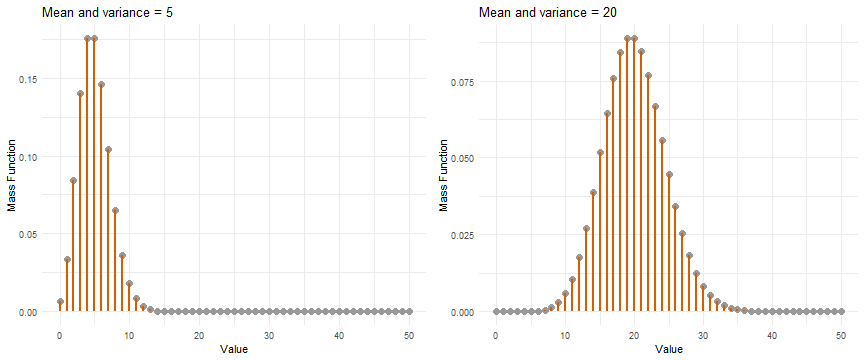

Generalized Linear Model: Poisson Log-Linear Model

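Here, we assume that:

$$y_i \mid x_i \sim \text{Poisson}(\lambda_i)$$

$$\log(\lambda_i) = \beta_0 + \beta_1 x_i$$

A special property of the Poisson distribution is that its mean and variance are equal: $E(y_i \mid x_i) = \text{Var}(y_i \mid x_i) = \lambda_i$.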

Poisson distribution with λ=5:
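A minimal sketch, using only base R, of how the $\lambda = 5$ case can be inspected: the exact probabilities come from `dpois()`, and a large simulated sample from `rpois()` lets us check that the sample mean and variance are both close to $\lambda$. The range 0 to 15 and the sample size are arbitrary choices for illustration.

```r
set.seed(1)
lambda <- 5

# Exact probabilities P(Y = k) for k = 0, ..., 15
k <- 0:15
probs <- dpois(k, lambda = lambda)

# Large simulated sample: mean and variance should both be close to lambda
draws <- rpois(n = 1e5, lambda = lambda)
c(mean = mean(draws), variance = var(draws))

# Bar plot of the probability mass function
barplot(probs, names.arg = k, xlab = "y", ylab = "P(Y = y)")
```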

The story of the Poisson Log-Linear Model

For every value of $x$, we expect to see observations $y$ that are generated according to the Poisson distribution with $\lambda_x = e^{\beta_0 + \beta_1 x}$.

Simulating from a Poisson Log-Linear Model

    pbeta0 <- 0.1
    pbeta1 <- 0.2
    px <- runif(n = 100, min = -5, max = 5)
    xlambda <- exp(pbeta0 + pbeta1*px)   # mean of y at each x
    py <- rpois(n = 100, lambda = xlambda)

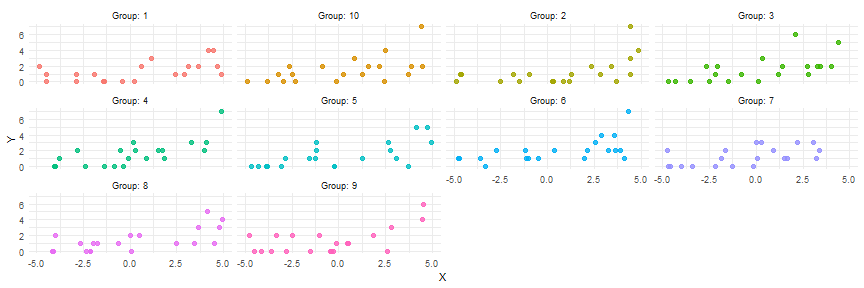
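Once data have been simulated from the Poisson log-linear model, one way to check our understanding is to fit the same model back and see whether the estimates land near the values we chose. The sketch below uses `glm()` with `family = poisson` and a larger sample size than above (an assumption, made so the estimates are stable); the seed is arbitrary.

```r
set.seed(42)

# Same parameter values as the simulation above, with a larger n (an assumption)
pbeta0 <- 0.1
pbeta1 <- 0.2
px <- runif(n = 1000, min = -5, max = 5)
py <- rpois(n = 1000, lambda = exp(pbeta0 + pbeta1 * px))

# Fit the Poisson log-linear model; the default link for poisson() is the log
fit <- glm(py ~ px, family = poisson)

coef(fit)             # estimates of beta0 and beta1, on the log scale
confint.default(fit)  # Wald-type 95% intervals
```

Because the link is the log, `exp(coef(fit)["px"])` is the estimated multiplicative change in the expected count per unit increase in `px`.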

Hierarchical Model

One example

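$$y_{ij} \sim \text{Poisson}(\lambda_{ij})$$

$$\log(\lambda_{ij}) = \beta_{0,i} + \beta_{1,i} x_{ij}$$

$$\beta_{0,i} \overset{iid}{\sim} N(\mu_0, \sigma_0^2)$$

$$\beta_{1,i} \overset{iid}{\sim} N(\mu_1, \sigma_1^2)$$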

Story

Similar to the Poisson Log-Linear Model from before, but now we assume that some parameters vary across groups. However(!), the parameters $\beta_{0,i}$ and $\beta_{1,i}$ are linked across groups, because each is drawn from a common distribution!

Simulating from a Hierarchical Model

    ## 10 individuals
    pb0h <- rnorm(n = 10, mean = 0.1, sd = 0.1)   # individual intercepts
    pb1h <- rnorm(n = 10, mean = 0.2, sd = 0.1)   # individual slopes
    pxh <- matrix(data = NA, nrow = 10, ncol = 20)
    pyh <- matrix(data = NA, nrow = 10, ncol = 20)
    for (j in 1:10) {
      pxh[j, ] <- runif(n = 20, min = -5, max = 5)
      pyh[j, ] <- rpois(n = 20, lambda = exp(pb0h[j] + pb1h[j]*pxh[j, ]))
    }
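To build intuition for what the link across individuals buys us, one option (an illustration, not part of the original simulation) is to ignore the link and fit a separate Poisson GLM to each individual's 20 observations. The sketch re-simulates the data with the same settings so that it runs on its own; the seed is arbitrary.

```r
set.seed(7)
n_ind <- 10   # individuals
n_obs <- 20   # observations per individual

# Individual-level parameters drawn from common distributions, as above
pb0h <- rnorm(n = n_ind, mean = 0.1, sd = 0.1)
pb1h <- rnorm(n = n_ind, mean = 0.2, sd = 0.1)

# Simulate each individual's data and fit one GLM per individual,
# ignoring the fact that the parameters share a distribution
est <- t(sapply(seq_len(n_ind), function(j) {
  x <- runif(n = n_obs, min = -5, max = 5)
  y <- rpois(n = n_obs, lambda = exp(pb0h[j] + pb1h[j] * x))
  coef(glm(y ~ x, family = poisson))
}))

# True individual-level slopes next to their separate estimates
cbind(true_slope = pb1h, estimated_slope = est[, "x"])
```

With only 20 observations each, the separate estimates can stray from the true values; a hierarchical fit would instead borrow information across individuals through $\mu_0, \mu_1, \sigma_0^2, \sigma_1^2$.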

Simulation -- The Underused Tool

Advantages of simulation:

- No data needed.
- We can learn about what our models actually do, e.g. how different parameter values affect the outcome.
- We build intuition about what different models imply.

If after simulation it's hard to understand what these parameters mean in the overall context...

we can always go back and do more simulations!

It'll be hard to interpret the parameters if we don't have a good idea of what they actually mean for our process.

Choosing candidate models

Remember: every model tells a story.

How does the model's story tie into the story we want to tell?

When we simulate data from various models, does it look like the data we have?

Fitting models to data

It's one thing to think about the wonderful stories that models can tell, and another to face the reality of trying to fit these models to data.

Some models are well-behaved with small data sets...

Some models are data hungry...

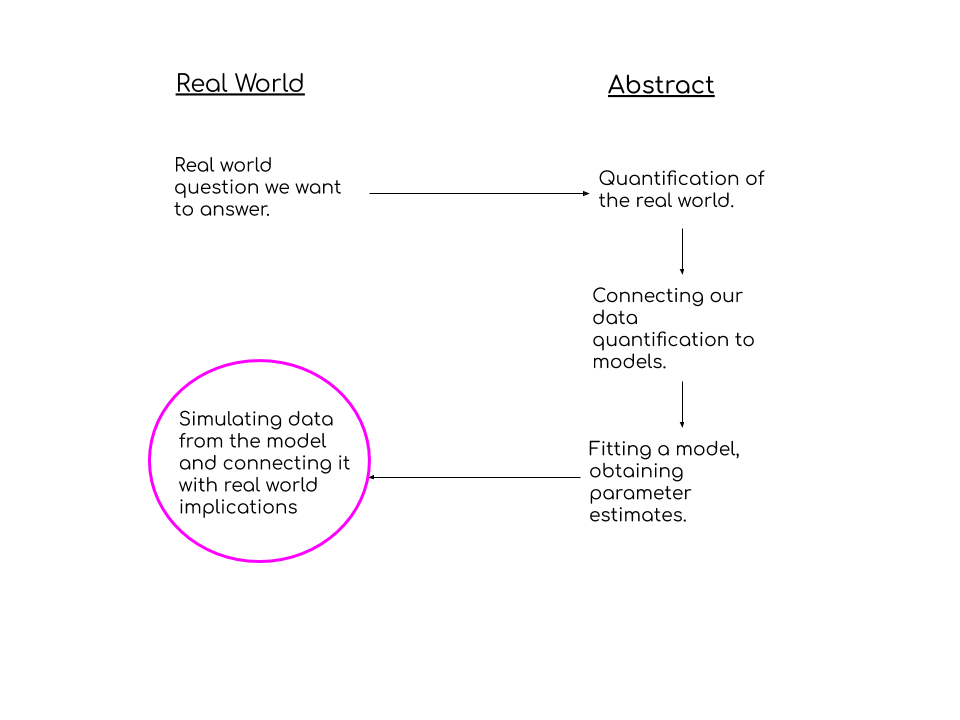

Back to Simulation

Even if your story matches perfectly with a model's story...a fairytale ending isn't certain.

Models need a certain amount of information before they reveal secrets about the data...

(in proper statistical terms: estimability/identifiability)

Good practice:

- Put together information about your data: What's the sample size? How many replicates are there? Number of individuals?
- Simulate data from the model, matching the specifics of your data.
- Fit the model to the simulated data. What do you see? How much uncertainty is there in the parameter estimates?
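The good-practice steps above can be sketched with the simple linear regression from earlier. The sample sizes below are hypothetical stand-ins for "your data specifics", and `slope_interval()` is a helper written for this illustration.

```r
set.seed(17)
beta0 <- 1; beta1 <- 2; std.dev <- 5

# Simulate a data set of size n from the SLR, fit it with lm(),
# and return the 95% confidence interval for the slope (hypothetical helper)
slope_interval <- function(n) {
  x <- rt(n = n, df = 3)
  y <- beta0 + beta1 * x + rnorm(n = n, mean = 0, sd = std.dev)
  fit <- lm(y ~ x)
  confint(fit)["x", ]
}

# Hypothetical sample sizes; replace with the sizes in your own data
sizes <- c(20, 200, 2000)
intervals <- t(sapply(sizes, slope_interval))
rownames(intervals) <- paste("n =", sizes)
intervals

# Interval widths: these typically shrink as the sample size grows
widths <- intervals[, 2] - intervals[, 1]
widths
```

If the interval at your actual sample size is too wide to say anything useful about the slope, that is worth knowing before fitting the model to the real data.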

Fitting a Model

Once we have fit a model to our data and constructed uncertainty intervals (not before), we begin to examine the patterns that are revealed.

- Can we interpret the parameters?
- Are the results in line with what was expected?

I'm sidestepping the idea of 'significance' on purpose. In favor of...

How do the values of my parameters jointly tell the story?

For Bayesian inference, check out Stan.

Assessing/Interpreting a Model

Mostly done in the context of 'posterior predictive checks' when conducting Bayesian inference, this idea falls into the class of simulation-based model assessment.

- Simulate data from the fitted model, $y^{rep}$:
  - Draw values of the parameters $\theta_i$ from their distributions.
  - Plug $\theta_i$ into the model to simulate values of $y^{rep}_i$.
  - Repeat the above steps multiple times (say, 100 times, $i = 1, \dots, 100$).
- Compare $y^{rep}$ to $y$.

The key idea is: if our model is the data generating mechanism, could it generate data like ours?

Of course, there are still residuals and other traditional measures of model evaluation.
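The steps above can be sketched in base R. This is not a full Bayesian posterior predictive check (for that, posterior draws from Stan would replace the parameter draws below): as a stand-in, parameter values are drawn from a normal approximation centered at the `glm()` estimates. The "observed" data are simulated so the sketch runs on its own, and the proportion of zeros is just one possible summary statistic.

```r
set.seed(123)

# Hypothetical "observed" data, simulated here so the sketch is self-contained
x <- runif(n = 100, min = -5, max = 5)
y <- rpois(n = 100, lambda = exp(0.1 + 0.2 * x))

fit <- glm(y ~ x, family = poisson)
theta_hat <- coef(fit)
V <- vcov(fit)
L <- t(chol(V))  # lower-triangular factor, so that L %*% t(L) equals V

n_rep <- 100
stat_rep <- numeric(n_rep)
for (i in seq_len(n_rep)) {
  # 1. Draw parameter values (normal approximation, standing in for posterior draws)
  theta_i <- theta_hat + as.vector(L %*% rnorm(length(theta_hat)))
  # 2. Plug them into the model to simulate a replicated data set
  y_rep <- rpois(n = length(x), lambda = exp(theta_i[1] + theta_i[2] * x))
  # 3. Record a summary statistic of the replicate (here: proportion of zeros)
  stat_rep[i] <- mean(y_rep == 0)
}

# Compare: where does the observed statistic fall among the replicates?
stat_obs <- mean(y == 0)
mean(stat_rep >= stat_obs)
```

A value near 0 or 1 in the last line would suggest the model struggles to generate data with as many (or as few) zeros as we observed.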

Interpreting our Model Results

We need to make sure we interpret the results in the context of:

- How we quantified the story we want to tell.
- What information was collected.
- What information was NOT collected.

What kinds of data can our model produce? And what does that imply about the real world?

What structure did we not capture? What data not collected could affect the outcomes?

Stories with Impact

The biggest impact we can have is when we make discoveries about the world we live in.

Something to always keep in mind: the stories we tell come from our perspectives, shaped by our experiences and driven by our personal choices. Being open and transparent about this is part of telling an impactful story.

Reproducible research:

- Can anyone reproduce your workflow?
- Do the results reproduce?

Data availability:

- Making the data available

Research that's accessible to everyone:

- Why did the story turn out the way it did?
- What was tried?
- What failed?

Allowing insights into your research process.

Legends vs. Stories

Legends are entertaining and part of the culture. Examples: La Llorona, El Cucuy, La Mano Peluda, El Callejón del Beso. But there is no way to verify what actually happened.

Legends in science:

- Grand claims
- Inaccessible data
- Inaccessible code
- Subjective decisions not declared as such

Stories in science:

- Claims grounded in how the problem was quantified
- Accessible data
- Code that is accessible and works
- Commentary on the perspective and subjectivity of the analysis

Let's move forward with fewer legends and more stories.